Action Verb Corpus (AVC)

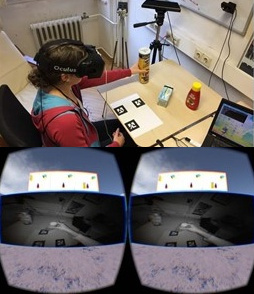

The Action Verb Corpus (AVC) comprises multimodal data from 46 episodes (recordings) performed by 12 participants, with a total of 390 instances of three simple actions: take, put, and push. Audio, video, and motion data (hand and arm) were recorded while participants performed an action and described what they were doing.

Publications

Details about the full data set and how the data were collected can be found in: Stephanie Gross, Matthias Hirschmanner, Brigitte Krenn, Friedrich Neubarth, Michael Zillich. Action Verb Corpus. LREC 2018.

Authors

- Brigitte Krenn

- Stephanie Gross

- Friedrich Neubarth

- Matthias Hirschmanner

- Michael Zillich

Licence

CC BY 4.0

Sponsors

Human Language Understanding: Grounding Language Learning (HLU), Call 2014 – № 2287-N35

Key facts

- Version: 1.0

- Release date: 31 January 2018

- DOI: 10.5281/zenodo.5140014

- Language: German

- Modality: Multimodal

- Licence: CC BY 4.0

- Associated projects: RALLI, ATLANTIS

- Contact: Brigitte Krenn