Human Tutoring of Robots in Industry

The project Human Tutoring of Robots in Industry investigates what industrial companies require when augmenting their robots with artificial intelligence for efficient human-robot interaction. We study how an artificial system can be adapted to the industrial context. The system learns new actions and objects by observing a human tutor and processing the tutor's natural language descriptions. Successful worker-robot interaction, however, also requires research into the factors that affect workers, such as their well-being and their perceived control. The project builds on results from the project RALLI on robot action and word learning through observation and natural language descriptions. Coming from basic research, the project aims to identify current human-robot interaction requirements of stakeholders in industries that employ robots in their manufacturing processes.

Through interviews and online surveys, our system will be adapted to the industrial context, taking into account (i) requirements from the management level of companies employing industrial robots and (ii) requirements from workers interacting with industrial robots. Partial results, focusing on non-verbal aspects of worker-cobot interaction, are implemented in URSim.

In summary, the practical results highlight the importance of individually tailored systems for worker-cobot interaction. In this respect, we offer consultancy to interested companies.

Publications

- Hirschmanner, M., Gross, S., Zafari, S., Krenn, B., Neubarth, F., & Vincze, M. (2021, August). Investigating Transparency Methods in a Robot Word-Learning System and Their Effects on Human Teaching Behaviors. In 2021 30th IEEE International Conference on Robot & Human Interactive Communication (RO-MAN) (pp. 175-182). IEEE.

- Krenn, B., Gross, S., Dieber, B., Pichler, H., & Meyer, K. (2021). A proxemics game between festival visitors and an industrial robot. Workshop on Exploring Applications for Autonomous Non-verbal Human-Robot Interaction at HRI 2021, Boulder, CO.

- Krenn, B., Reinboth, T., Gross, S., Busch, C., Mara, M., Meyer, K., Heiml, M., & Layer-Wagner, T. (2021). It's your turn! - A collaborative human-robot pick-and-place scenario in a virtual industrial setting. Workshop on Exploring Applications for Autonomous Non-verbal Human-Robot Interaction at HRI 2021, Boulder, CO.

Press coverage

- Interview with Stephanie Gross in an episode of matrix, ORF Radio Ö1's weekly show on new technologies, on April 23, 2021. The episode, entitled "Hallo, spricht hier ein Bot?" ("Hello, is this a bot speaking?"), examines the roles and capabilities of voice assistants, from social robots to Shakespeare automata.

Gallery

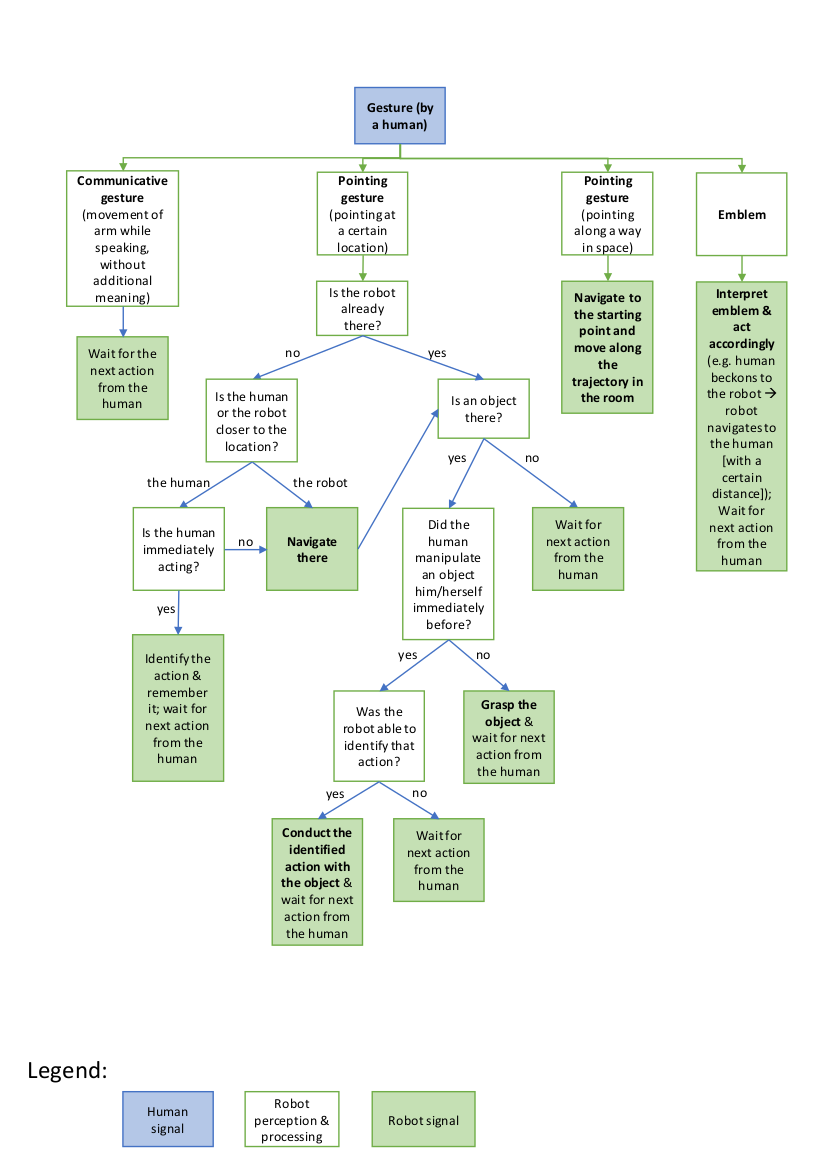

An example of a decision tree on how a robot reacts to human gestures in a collaborative scenario. When the system recognizes a human gesture, it needs to identify the type of gesture and then act accordingly.
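The two-step logic in the caption (first recognize that a gesture occurred, then branch on its type) can be sketched as a small decision tree. This is a minimal illustration only: the gesture classes `STOP`, `POINT`, `WAVE`, `THUMBS_UP` and the reaction labels are hypothetical and are not taken from the figure or from the project's implementation.

```python
from enum import Enum, auto
from typing import Optional


class Gesture(Enum):
    """Hypothetical gesture classes (assumed for illustration only)."""
    STOP = auto()
    POINT = auto()
    WAVE = auto()
    THUMBS_UP = auto()


# Leaf nodes of the tree: one hypothetical robot reaction per gesture type.
REACTIONS = {
    Gesture.STOP: "pause_motion",
    Gesture.POINT: "look_at_pointed_location",
    Gesture.WAVE: "attend_to_human",
    Gesture.THUMBS_UP: "proceed_with_next_step",
}


def react(gesture_detected: bool, gesture: Optional[Gesture]) -> str:
    """Walk a two-level decision tree and return a reaction label."""
    # First node: was any human gesture recognized at all?
    if not gesture_detected:
        return "continue_task"
    # Second node: branch on the gesture type; an unclassified gesture
    # (None) falls back to directing attention to the human.
    return REACTIONS.get(gesture, "attend_to_human")


if __name__ == "__main__":
    print(react(True, Gesture.STOP))  # prints pause_motion
```

Keeping the leaves in a dictionary separates the tree's structure from the concrete reactions, so new gesture types can be added without touching the branching logic.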

Example 1 of nonverbal behavior implemented on the UR10 in the simulation environment URSim. The robot directs its attention to the human. Potential context: the robot has just finished a task and is now waiting for the human to proceed.

Example 2 of nonverbal behavior implemented on the UR10 in the simulation environment URSim. The robot beckons to the human and then directs its attention to the human. Potential context: the robot has just finished a task and beckons the human to proceed with the task.

Example 3 of nonverbal behavior implemented on the UR10 in the simulation environment URSim. The robot points at a certain location in the room and then directs its attention to the human. Potential context: the robot signals to the human to bring an object from a certain location in the room.
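The three examples share a pattern: each behavior is a short sequence of motion steps that ends with the robot directing its attention to the human. A minimal sketch of that composition follows, assuming that "attention" and "pointing" are realized by orienting the tool along the unit vector toward a target position. The step names (`orient_tool`, `beckon_toward`), the positions, and the coordinate frame are hypothetical; this is not the URScript or URSim code used in the project.

```python
import math
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Step = Tuple[str, Vec3]  # (hypothetical primitive name, unit direction)


def direction(from_pos: Vec3, to_pos: Vec3) -> Vec3:
    """Unit vector pointing from from_pos to to_pos."""
    dx, dy, dz = (t - f for f, t in zip(from_pos, to_pos))
    norm = math.sqrt(dx * dx + dy * dy + dz * dz)
    if norm == 0.0:
        raise ValueError("positions coincide; direction is undefined")
    return (dx / norm, dy / norm, dz / norm)


def attend(tool: Vec3, human: Vec3) -> List[Step]:
    """Example 1: orient the tool toward the human."""
    return [("orient_tool", direction(tool, human))]


def beckon(tool: Vec3, human: Vec3) -> List[Step]:
    """Example 2: a beckoning motion toward the human, then attention."""
    return [("beckon_toward", direction(tool, human))] + attend(tool, human)


def point_at(tool: Vec3, target: Vec3, human: Vec3) -> List[Step]:
    """Example 3: orient the tool toward a location, then attention."""
    return [("orient_tool", direction(tool, target))] + attend(tool, human)


if __name__ == "__main__":
    # Hypothetical positions in metres, in an assumed robot base frame.
    tool = (0.4, 0.0, 0.5)
    human = (1.5, 0.3, 1.6)
    shelf = (0.0, -1.0, 0.8)
    for name, vec in point_at(tool, shelf, human):
        print(name, tuple(round(c, 3) for c in vec))
```

Composing behaviors from a shared `attend` step mirrors the captions, where each behavior concludes by turning toward the human; on a real robot, each step would still have to be translated into actual motion commands.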

Research staff

Sponsor

Vienna Science and Technology Fund

NEXT – New Exciting Transfer Projects 2019

- Duration: 2019 to 2021
- Coordinator: OFAI
- Contact: Stephanie Gross