OFAI is proud to present "Is it Language or Task Design? Reinterpreting language models' recent successes in morphology and syntax learning", a talk by Jordan Kodner of Stony Brook University, NY. The talk is part of OFAI's 2024 Spring Lecture Series.

Members of the public are cordially invited to attend the talk online via Zoom on Wednesday, 24 April 2024 at 18:30 CEST (UTC+2):

URL: https://us06web.zoom.us/j/84282442460?pwd=NHVhQnJXOVdZTWtNcWNRQllaQWFnQT09

Meeting ID: 842 8244 2460

Passcode: 678868


Talk abstract: The success of neural language models (LMs) on a wide range of language-related tasks may be in part due to their ability to induce human-like representations or understanding of natural language grammars. Humans are, after all, gold-standard language learners. For the past several years, researchers pursuing this question have developed a number of methodologies for testing the grammar representations learned by LMs that have reached generally positive conclusions. I will take a critical look at such studies in this talk. While modern LMs are clearly extremely impressive, and clearly do often capture important aspects of natural language grammars, the methodologies of many popular studies have unfairly overestimated the capacities of LMs when it comes to their ability to induce human-like representations. Focusing on questions of hierarchical syntactic representations and generalization in inflectional morphology, I will discuss how unintended biases in data-splitting, artificial training or test data, overly simplistic evaluations, weak or absent baselines, and faulty interpretations have conspired to overestimate the abilities of LMs. While the conclusions of this study are largely negative in terms of the current state of affairs, they are also optimistic. By employing more thorough and rigorous methodologies, we have developed a better scientific understanding of the nature of LMs and representations of the grammar. In identifying weak points for current models, we point towards research areas where greater improvements may be gained.

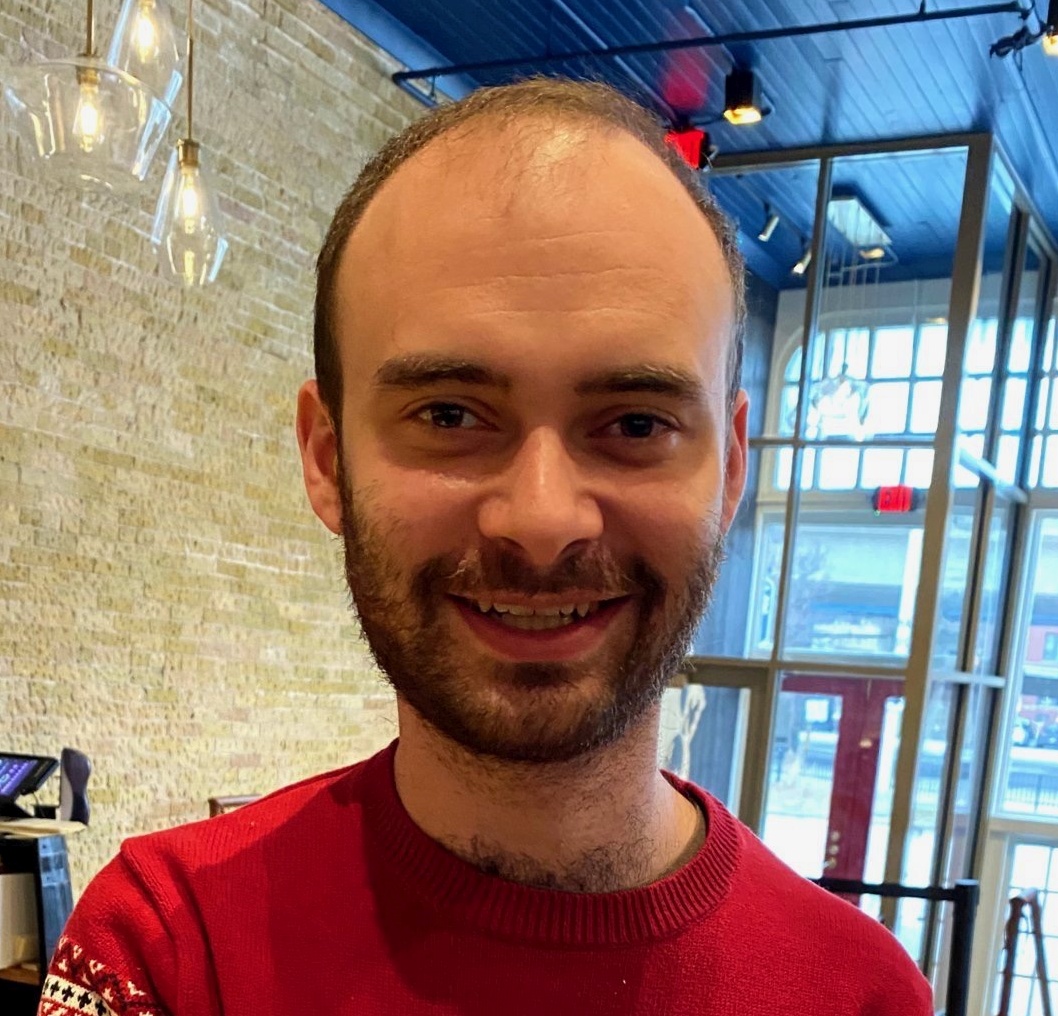

Speaker biography: Jordan Kodner is an Assistant Professor in the Stony Brook University Department of Linguistics and an affiliate of the Institute for Advanced Computational Science and Natural Language Processing group. His primary research revolves around computational approaches to child language acquisition and their broader implications. In particular, he studies algorithmic models of grammar acquisition, especially morphology, how those processes drive language variation and change, what insights they provide for low-resource NLP, and what they tell us about the intersection of (low-resource) NLP and cognitive science. In 2020, he received his PhD from the University of Pennsylvania Department of Linguistics, where he worked with Charles Yang and Mitch Marcus. Prior to that, he received a master's degree from the University of Pennsylvania Department of Computer and Information Science in 2018. From 2013 through 2015, he was an Associate Scientist in the Speech, Language, and Multimedia group at Raytheon BBN Technologies, where he worked on defense- and medical-related projects.